Cross-border financial data remains fragmented, siloed, and difficult to use for proactive risk oversight. With new privacy, AI, and governance rules tightening globally, collaboration between financial institutions has become both necessary and technically complex. Federated learning is a promising framework for overcoming these challenges.

Federated learning is the process of training models across institutions without centralizing data, and is now being piloted for systemic risk analysis, stress testing, and financial crime detection. Recent initiatives like the BIS’s Project Aurora and the DNB’s federated modelling pilot show how this can work in practice.

Here’s what’s changing in financial system analytics, and why federated learning could redefine how we measure and manage systemic risk.

Why Federated Learning Matters

Federated learning directly addresses one of the biggest tensions in finance: the trade-off between collaboration and confidentiality.

| Traditional Approach | Federated Approach |
|---|---|
| Centralizes data in a single repository | Keeps data where it resides |
| High compliance and cybersecurity risk | Privacy-preserving collaboration |
| Limited cross-border feasibility | Cross-jurisdiction cooperation under sovereignty laws |

Recent Proof-of-Concepts and Pilot Projects

BIS Project Aurora (2023)

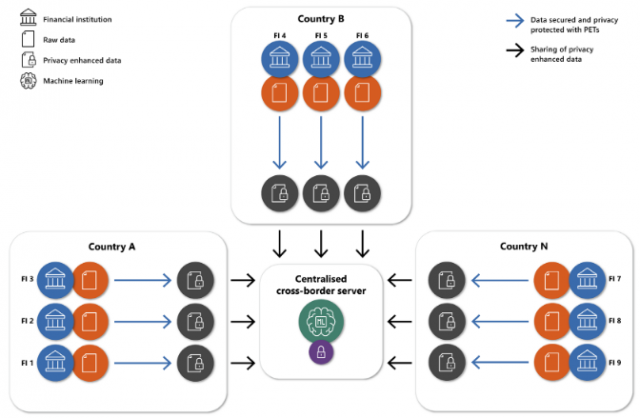

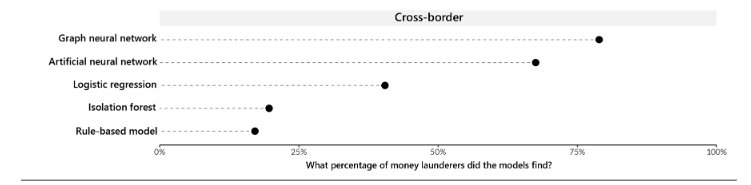

The Bank for International Settlements demonstrated collaborative anti-money-laundering (AML) analytics using FL and network analysis.

“The implementation of a federated learning model enhanced each country’s capability to detect money laundering networks within its own jurisdiction.” (BIS Project Aurora, 2023)

Dutch Central Bank (DNB) Federated Modelling (2025)

DNB developed a technical proof-of-concept for system-wide stress testing. It showed that federated results closely matched those from fully centralized data, which supports the feasibility of privacy-preserving macroprudential analytics.

Industry Pilots (Lucinity, Option One Tech)

Financial-crime specialists have applied FL to detect synthetic identities, abnormal loan stacking, and cross-institution fraud patterns. These threats are often invisible in institution-level data.

Together, these initiatives mark a shift from “data sharing” to “model sharing.”

Applications of Federated Learning in Risk Monitoring and Supervisory Technology

Federated learning can enhance supervisory insight across several domains:

- Cross-Institution Risk Assessment:
  - Joint credit-scoring or exposure models capturing correlated risk factors.
  - Enables early identification of emerging leverage or liquidity stress.
- Fraud and AML Detection:
  - Cross-bank collaboration to uncover coordinated illicit activity.
  - Sensitive data stays within each institution, with only model parameters or encrypted weights shared.
- System-Wide Stress Testing:
  - Central banks and supervisors can simulate shocks while institutions retain control of granular data.
  - Aggregated results reveal systemic vulnerabilities, not firm-level secrets.
- Macroprudential Early-Warning Systems:
  - Machine-learning models flag non-linear stress indicators or anomalous exposure patterns before they escalate into systemic crises.

What Model Types Work Best

Federated learning is particularly effective for models that gain value from data diversity and structural similarity across participants.

| Model Type | Use in Systemic Risk / Stress Testing |
|---|---|
| Gradient-Boosted Trees (XGBoost, LightGBM) | Credit-risk scoring, default probability aggregation |
| Graph Neural Networks (GNN) | Networked exposures, transaction link analysis |
| Autoencoders / Anomaly Detectors | Non-linear stress detection, leverage buildup alerts |
| Time-Series Models (LSTM, TCN) | Cross-market volatility and liquidity prediction |

These architectures thrive when local data heterogeneity captures different facets of systemic behavior, an ideal use case for federated learning.

From Pilots to Policy

While current deployments remain proof-of-concept, the momentum is clear:

- Regulators are incorporating privacy-preserving analytics into their SupTech and RegTech roadmaps.

- Industry consortia are testing federated frameworks for credit risk, AML, and operational resilience.

- Policy bodies (BIS FSI, OECD, FATF) are evaluating governance standards and interoperability protocols.

To transition from pilots to production, financial institutions will need to address:

- Model governance and explainability across distributed nodes,

- Interoperability standards for data schema and API design, and

- Legal frameworks defining accountability in collaborative analytics.

Federated Learning Meets Financial Stability

As pilots evolve into policy frameworks, attention is turning to how federated learning can strengthen the foundations of financial stability.

By enabling shared model development without data centralization, federated learning offers a pathway for supervisors, central banks, and institutions to jointly detect system-wide risks while preserving confidentiality.

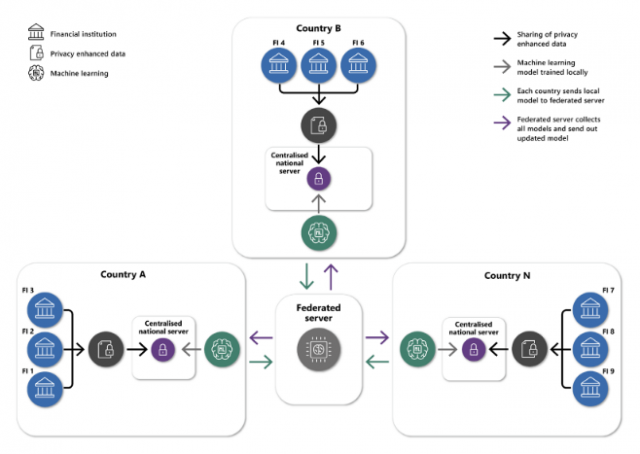

At its core, federated learning transforms the way systemic risk can be monitored. Instead of building one model with one dataset, many participants contribute to a collective intelligence layer, each training locally while the global model integrates insights securely.

This architecture creates a form of privacy-preserving visibility: a shared analytical lens across institutions and jurisdictions that traditional data-sharing frameworks have not been able to provide.

1. Why Data Fragmentation Has Been a Barrier

The Problem

Financial stability oversight depends on data that’s fragmented across institutions, markets, and jurisdictions.

Different accounting regimes, disclosure formats, and risk taxonomies make it hard for regulators to construct a unified picture of system-wide exposures.

Even when cross-border data sharing is possible, privacy laws and sovereignty constraints limit the extent of integration, especially for sensitive datasets like counterparty positions or client transactions.

As a result, emerging vulnerabilities such as hidden leverage or correlated liquidity shocks often remain undetected until they spread across institutions.

The Solution

Federated learning offers a way to analyze distributed data without moving it.

Each institution trains models locally, sharing only the learned parameters or encrypted weights, not the underlying records.

A central aggregator (for example, a central bank or consortium) combines these updates to produce a global model that captures cross-market patterns of stress and exposure.

This approach satisfies data sovereignty requirements while delivering the collective intelligence needed for system-wide monitoring.
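The aggregation step can be illustrated with a minimal sketch. It assumes the aggregator uses sample-weighted averaging of parameter vectors (commonly called federated averaging, or FedAvg); the pilots discussed here may use a different rule. The function name, bank updates, and sample counts are hypothetical.

```python
from typing import List, Sequence


def federated_average(
    updates: Sequence[Sequence[float]],
    sample_counts: Sequence[int],
) -> List[float]:
    """Combine local parameter vectors into one global vector.

    Each institution's vector is weighted by the number of records it
    trained on, so larger datasets pull the average harder.
    """
    if not updates or len(updates) != len(sample_counts):
        raise ValueError("need one sample count per update")
    total = sum(sample_counts)
    dim = len(updates[0])
    return [
        sum(n * w[i] for w, n in zip(updates, sample_counts)) / total
        for i in range(dim)
    ]


# Hypothetical parameter vectors from three institutions (no raw records).
bank_updates = [[0.2, -1.0], [0.4, -0.5], [0.1, 0.0]]
bank_sizes = [1000, 3000, 1000]

global_weights = federated_average(bank_updates, bank_sizes)
print(global_weights)
```

Only the parameter vectors and record counts reach the aggregator. In a real deployment this step would repeat over many training rounds, with the global vector sent back to each institution between rounds.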

✅ Tip: Start with narrow use-cases (e.g. credit default clustering) before moving to full stress-testing scenarios.

2. Autoencoders as a Foundation for Shared Representations

The Problem

Each institution structures its data differently depending on its jurisdiction, regulatory focus, and market structure.

For example, liquidity ratios and balance-sheet exposures might dominate in one region, while counterparty risk metrics or funding spreads are more relevant in another.

This heterogeneity makes it difficult to build comparable models or detect systemic patterns across institutions.

Without a common representation of risk, cross-market anomalies can remain hidden.

The Solution

Autoencoders help overcome this challenge by learning compact latent spaces that capture the essential relationships within each institution’s data.

Each institution trains a local autoencoder on its proprietary data. The resulting latent representation acts as a compressed “fingerprint” of its balance-sheet behavior and internal risk dynamics.

In a federated setup, only the encoder weights or latent vectors are securely aggregated, protected by Differential Privacy (DP) or Secure Multi-Party Computation (SMPC).
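As a rough illustration of the Differential Privacy option, the sketch below clips an update to a maximum L2 norm and adds Gaussian noise before it leaves the institution. The function names, `max_norm`, and `noise_multiplier` are assumptions chosen for the example. Translating them into a formal privacy budget requires separate accounting that is not shown, and SMPC is not covered here.

```python
import math
import random
from typing import List, Sequence


def clip_by_l2_norm(update: Sequence[float], max_norm: float) -> List[float]:
    """Scale the update down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(x * x for x in update))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [x * scale for x in update]


def privatize_update(
    update: Sequence[float],
    max_norm: float,
    noise_multiplier: float,
    rng: random.Random,
) -> List[float]:
    """Clip the update, then add Gaussian noise scaled to the clipping bound."""
    clipped = clip_by_l2_norm(update, max_norm)
    sigma = noise_multiplier * max_norm
    return [x + rng.gauss(0.0, sigma) for x in clipped]


rng = random.Random(0)      # fixed seed so the demo is reproducible
raw_update = [3.0, 4.0]     # hypothetical encoder-weight update, L2 norm 5

clipped = clip_by_l2_norm(raw_update, max_norm=1.0)
noisy = privatize_update(raw_update, max_norm=1.0, noise_multiplier=0.5, rng=rng)
print(clipped, noisy)
```

Clipping bounds how much any single institution can influence the aggregate, and the noise masks what remains. A larger `noise_multiplier` gives stronger protection at the cost of a less accurate global model.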

The global model forms a shared manifold (a common latent space) where institutions’ risk profiles can be compared without revealing their raw inputs.

Even though the data remains private, patterns become visible at the system level. When several institutions’ latent vectors move in sync, the global model can detect co-movement, leverage buildup, or systemic stress clusters, which signal areas that warrant closer supervisory attention.

Regulators can then use these signals to guide investigations or to initiate multilateral memoranda of understanding (MMoUs) that coordinate further supervisory action.

✅ Tip: Use layer-wise aggregation rather than full model averaging to preserve local data semantics.
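One way to read this tip is sketched below: only the layers every institution has agreed to share are averaged, while institution-specific layers (for example, input layers tied to local data schemas) stay untouched. The layer names, the dictionary-based model format, and the unweighted average are assumptions made for the illustration.

```python
from typing import Dict, List, Sequence, Set

Model = Dict[str, List[float]]  # layer name -> flattened weights


def aggregate_shared_layers(
    local_models: Sequence[Model],
    shared_layers: Set[str],
) -> List[Model]:
    """Average only the named shared layers; every other layer stays local."""
    averaged: Dict[str, List[float]] = {}
    for name in shared_layers:
        columns = zip(*(model[name] for model in local_models))
        averaged[name] = [sum(col) / len(local_models) for col in columns]
    return [
        {
            name: list(averaged[name]) if name in averaged else list(weights)
            for name, weights in model.items()
        }
        for model in local_models
    ]


# Hypothetical models: input layers differ in size because each bank has
# its own feature set; the latent layer has a common shape.
bank_a = {"input_layer": [1.0, 2.0], "latent_layer": [0.2, 0.4]}
bank_b = {"input_layer": [5.0, 6.0, 7.0], "latent_layer": [0.6, 0.8]}

updated = aggregate_shared_layers([bank_a, bank_b], {"latent_layer"})
print(updated)
```

After aggregation both banks hold the same latent layer, which is what makes their representations comparable, while each keeps the input layer fitted to its own data.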

3. Detecting Anomalies and Stress Points

The Problem

Traditional stress tests rely on predefined scenarios and static assumptions.

They perform well for known risks such as interest-rate shocks or sectoral defaults, but struggle to detect unknown unknowns that emerge dynamically, like correlated funding stress, liquidity spirals, or contagion through non-linear exposures.

Moreover, when each institution monitors anomalies in isolation, systemic patterns remain invisible until it’s too late.

The absence of real-time, cross-institution anomaly detection leaves regulators reactive rather than proactive.

The Solution

Federated anomaly-detection frameworks introduce an adaptive, privacy-preserving way to surface early warning signals across the financial system.

Each institution uses unsupervised models such as variational autoencoders (VAEs), isolation forests, or one-class SVMs to detect irregular behavior in its own data streams.

Local anomaly scores or compressed representations are then securely aggregated, revealing co-movements in stress indicators across multiple institutions without exposing underlying data.
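Secure aggregation can be sketched with pairwise additive masking, one of several possible techniques and not necessarily the one used in the pilots above. Each pair of institutions agrees on a random mask that one adds and the other subtracts, so the masks cancel in the total. The simulation below runs in a single process, uses a seeded generator for reproducibility, and assumes scores are scaled to integers. A real protocol would derive masks from pairwise key agreement with cryptographic randomness. All names and values are hypothetical.

```python
import random
from typing import Dict, List

MODULUS = 2**32  # all arithmetic is done modulo a fixed integer


def pairwise_masks(party_ids: List[str], rng: random.Random) -> Dict[str, int]:
    """Give each party a net mask; the masks sum to zero modulo MODULUS."""
    net = {party: 0 for party in party_ids}
    for i, a in enumerate(party_ids):
        for b in party_ids[i + 1:]:
            shared = rng.randrange(MODULUS)  # secret known only to a and b
            net[a] = (net[a] + shared) % MODULUS
            net[b] = (net[b] - shared) % MODULUS
    return net


def secure_sum(values: Dict[str, int], rng: random.Random) -> int:
    """Sum the parties' values while the aggregator sees only masked inputs."""
    masks = pairwise_masks(list(values), rng)
    # Each masked value is what the aggregator would actually receive.
    masked = {p: (v + masks[p]) % MODULUS for p, v in values.items()}
    return sum(masked.values()) % MODULUS


rng = random.Random(42)  # fixed seed for a reproducible demo only
scaled_scores = {"bank_a": 120, "bank_b": 870, "bank_c": 455}  # score x 1000

total = secure_sum(scaled_scores, rng)
print(total)  # equals the plain sum of the three scores
```

The aggregator learns the total but not any individual contribution, since each masked value on its own looks random.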

When several entities begin to drift simultaneously from their baselines, the global model identifies this as a potential systemic anomaly worthy of supervisory attention.

Because computation and scoring remain local, the approach satisfies both data-sovereignty and confidentiality requirements.
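The idea of several entities drifting from their baselines at once can be expressed as a simple rule. In the sketch below, each institution computes a z-score locally against its own score history, and the aggregator raises an alert when a minimum share of institutions exceeds a threshold. The threshold of 3.0, the 50% share, and the sample data are illustrative assumptions, not values taken from any pilot.

```python
from statistics import mean, stdev
from typing import Dict, List


def drift_z_score(history: List[float], latest: float) -> float:
    """Standard deviations by which the latest score exceeds the local baseline."""
    spread = stdev(history)
    return (latest - mean(history)) / spread if spread > 0 else 0.0


def systemic_alert(
    z_scores: Dict[str, float],
    z_threshold: float = 3.0,
    min_share: float = 0.5,
) -> bool:
    """Flag a potential systemic anomaly when enough institutions drift together."""
    drifting = [name for name, z in z_scores.items() if z > z_threshold]
    return len(drifting) / len(z_scores) >= min_share


# Hypothetical local anomaly-score histories and latest readings.
histories = {
    "bank_a": [0.10, 0.12, 0.11, 0.09],
    "bank_b": [0.30, 0.28, 0.31, 0.29],
    "bank_c": [0.20, 0.22, 0.21, 0.19],
}
latest = {"bank_a": 0.25, "bank_b": 0.45, "bank_c": 0.21}

# Each z-score is computed locally; only this single number is shared.
z_scores = {name: drift_z_score(histories[name], latest[name]) for name in histories}
alert = systemic_alert(z_scores)
print(z_scores, alert)
```

Here two of the three banks jump well above their own baselines, so the rule fires even though their absolute score levels differ. Normalizing against each institution's own history is what makes the signals comparable.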

When combined with explainability methods such as SHAP or LIME applied to local models, these signals let supervisors interpret anomalies, trace their macro-drivers, and respond before risks propagate.

✅ Tip: Integrate federated anomaly scores into existing macroprudential dashboards as a complementary risk layer.

Bringing It All Together

Taken together, these approaches outline a clear evolution in systemic-risk analytics.

Federated learning transforms fragmented, jurisdiction-specific data into a cohesive intelligence layer, where institutions retain control of their information but contribute to a shared understanding of system-wide stability.

Autoencoders provide the common representational framework that links heterogeneous datasets, and federated anomaly detection adds the real-time vigilance needed for early warning.

Together, they move financial supervision beyond static reporting toward a model of continuous, privacy-preserving systemic oversight.

These developments signal a pivotal shift from data collection to collaborative intelligence, laying the groundwork for the next generation of SupTech and macroprudential surveillance.

“Federated learning transforms financial oversight from static data reporting to dynamic, privacy-preserving collaboration.”

Final Thoughts

Federated learning is emerging as a cornerstone of the next generation of data governance and systemic-risk infrastructure.

By allowing models, not raw data, to move across institutions, it resolves the long-standing tension between confidentiality and collaboration that has constrained cross-market analytics for decades.

As projects like BIS’s Aurora and DNB’s federated modelling advance, the emphasis will increasingly shift from proof-of-concept to operationalization and policy integration, embedding privacy-preserving analytics into the heart of financial supervision.

For regulators, the opportunity is clear: build continuous, privacy-aware monitoring frameworks that detect vulnerabilities as they form rather than after they spread.

For institutions, federated learning provides a compliant pathway to collective intelligence, strengthening both local and systemic resilience.

Stay Connected

Federated learning is just one part of the broader shift toward privacy-preserving financial intelligence.

If you’d like to explore how these techniques are reshaping supervision and systemic-risk monitoring, dive into our related pieces on Synthetic Data in Financial Services.

We’re also preparing a Data Sense White Paper featuring concrete architectures, implementation examples, and pilot insights on federated learning for systemic-risk detection.

Subscribe or follow Data Sense to be notified when it’s released and join the discussion on how privacy-preserving analytics can strengthen financial stability.

About Data Sense

Data Sense publishes insights on data automation, analytics, and applied AI for financial institutions and regulators. We translate technical advances into actionable business understanding. Contact us here to learn more.